AI deepfakes are here to stay. Spotting them is a skill you’ll need to master

AI can make anyone look like they are doing anything. Photo: Getty

Whenever tragedy or conflict strikes, social media users typically rush to share photos and videos from the events.

But some tricky use of artificial intelligence (AI) means many might be unwittingly viewing and sharing faked content.

With AI technology constantly improving, experts warn against automatically trusting that everything you see online is real.

Photos apparently showing French protesters hugging police kitted out in riot gear were recently circulated on Twitter.

Over the last few weeks, France has seen huge protests against the government’s plan to raise the retirement age from 62 to 64.

But an extra finger and an ear abnormality tipped off fellow Twitter users that the images were generated by AI.

The images’ source, Twitter user @webcrooner, quickly copped to the trickery when called out by other users, apparently with the aim of raising awareness of AI-generated images.

“Don’t believe everything you see on the internet,” reads an English translation of @webcrooner’s follow-up tweet, which also featured an AI-generated image of an officer hugging a stuffed bear.

“On my tweet yesterday the proof of Fake was obvious have you ever seen a [special mobile French police force] comforting a protester in France?”

But AI-generated content does not always feature such easily spotted errors. And its ability to deceive is only going to improve.

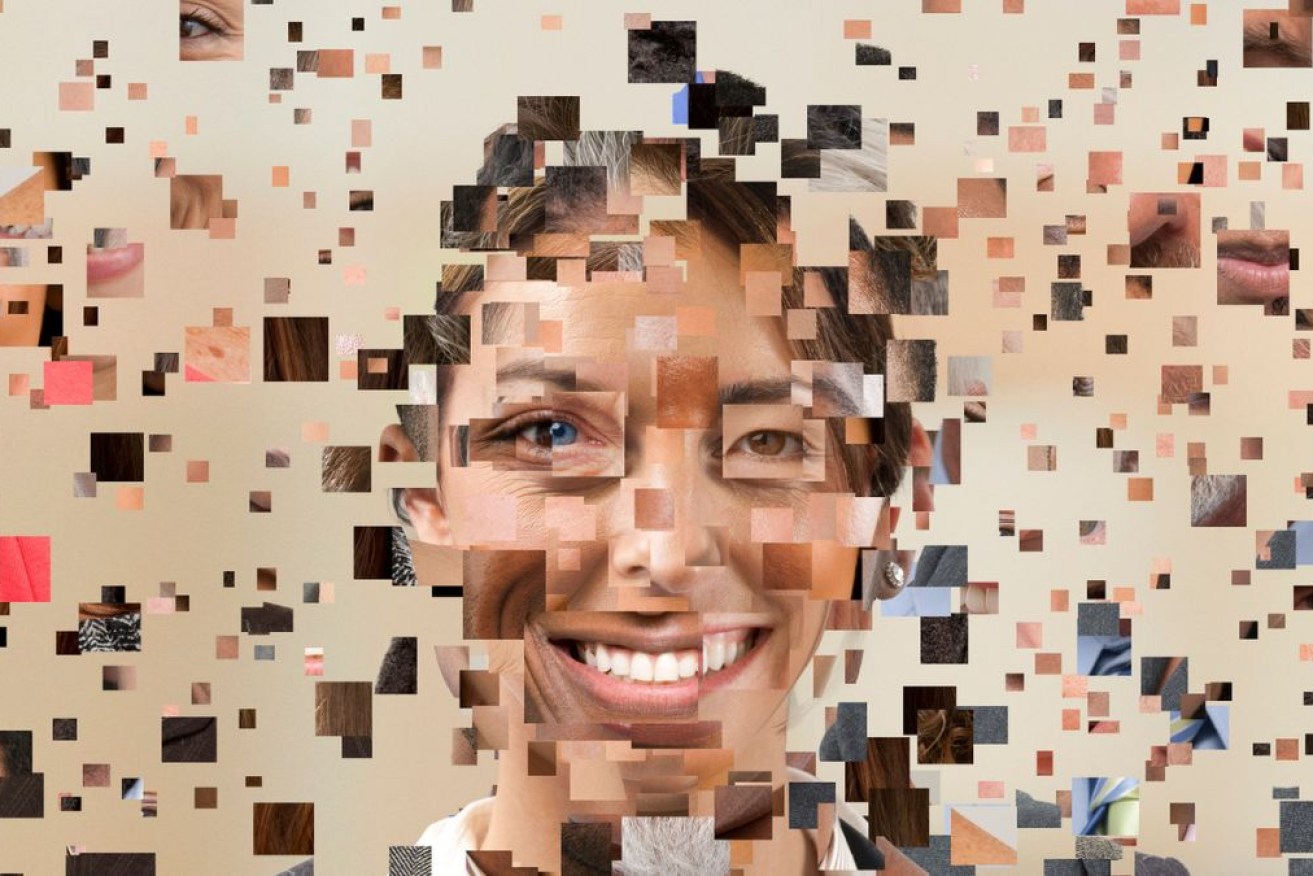

Signs and tells

Rob Cover, RMIT University professor of digital communication, said while AI is getting better, there’s usually something that feels “off” when looking at an AI-generated image or video.

“The signs are usually, there’s something slightly odd about the face, the skin might be just a little too smooth or not have enough … natural shadowing,” he said.

Hair is also often a big giveaway, especially facial hair, he said.

Another key tool for spotting a fake is context.

Professor Cover used the example of deepfake porn featuring public figures such as Meghan Markle.

“It’s the context … ‘Would she be doing this?’ is the first thing. It is utterly out of character,” he said.

“I often recommend that people search for keywords about the video, if they think that it might be authentic [and] which newspapers would have covered the story. Obviously, a story like that would have been covered by every major newspaper if it was real.

“If we spend the time to do some research around it, then we’re usually a lot better off drawing on our public knowledge and our collective intelligence.”

Film and Journalism Professor Mark Andrejevic, of Monash University’s School of Media, gave the example of videos posted by the purported news outlet Wolf News – which turned out to feature completely AI-generated avatars posing as news anchors.

The videos of the fake news anchors were distributed by pro-China bot accounts on Facebook and Twitter as part of a reported state-aligned information campaign.

AI-generated news anchors have been used to spread disinformation. Photo: Graphika

This is a significant departure from deepfake videos of the past, which used the faces and voices of real people (and have proved incredibly problematic, especially with the emergence of deepfake porn).

And it’s only going to get harder to sort out the real content from the fake, Professor Andrejevic said.

People without access to databases full of AI images and facial recognition technology are going to have to fall back on researching the content they come across to make sure it traces back to a reliable source, or has been reported by multiple credible news media outlets.

“It speaks to a larger crisis in our systems for adjudicating between true and false,” Professor Andrejevic said.

“We’ve seen the ability that AI systems have to just create content, image content, text content … that can pass for reality.

“We live in a world … of digital simulation that’s increasingly powerful, and will likely become increasingly widespread and cheap to manufacture.”

AI not just for the rich and tech-savvy

It’s not just cashed-up governments and tech groups behind the fake content you see online.

AI technology is becoming so simple and easy to access, just about anyone can use it, Professor Cover said.

“We’re seeing new platforms and new software emerging several times a year, and each time it’s better and better,” he said.

“And each time it seems to be easier to use.

“So we’re no longer looking at people needing any kind of professional skill at all, these are very much everyday people who are able to generate amazing deepfake images and video.”

Some popular and easily accessible deepfake image generators include Reface and DALL-E.

There are also several options available via apps on mobile phones, though many don’t use the term AI or deepfake in app stores, so they are slightly harder to find, Professor Cover said.

Two sides of the coin

There are two major concerns with AI and its role in the spread of false information.

One is perhaps more obvious: the concern that real people could be portrayed doing things that they didn’t do.

This was seen when thousands of people viewed a video spread online in 2021 that apparently showed then-New Zealand Prime Minister Jacinda Ardern smoking cocaine – the video was later proved fake.

A video of Jacinda Ardern apparently doing drugs was circulated widely in 2021. Photo: AFP Fact Check

The second concern is that AI-generated photos and videos may provide a cover for people who have done something they don’t want to admit.

For example, in 2017 then-president Donald Trump claimed the infamous tape that featured him bragging about how he could grope women was not real, after previously apologising for his “locker room talk”.

The New York Times reported Mr Trump suggested to a senator that the tape was not authentic, and repeated that claim to an adviser.

“The ability to cast doubt on the images that we have used to hold people accountable also speaks to the way in which the technology might be used to make accountability even harder,” Professor Andrejevic said.

Unfortunately, people are not always as interested in the truth as they are in reinforcing their own worldview.

“It’s hard to tell whether more and more people are being fooled by these images, or whether more and more people are just willing to circulate them because those images reinforce what they believe.”

The way forward

At this point, there is no holding back the development and improvement of AI.

But with concern mounting over the expanded capabilities of AI, Professor Cover said people should be “adaptive rather than alarmist”.

“It’s like with other AI issues at the moment, like ChatGPT, there’s a lot of alarmism, ‘How are we ever going to read and write or mark essays at school?’, that kind of thing,” he said.

“The reality is this [reaction] has been the same with every new technological advance for the last 50 years, right back to radio. And we always find a way to adapt to it.

“But my recommendation is that we’re going to have to do this very, very collectively, across a number of sectors: governments, the community, tech people, and so on.”